Angular over NFS

Of Old Times…

A long time ago (actually only about 30 years) it was common in research institutes to provide work stations (for example Sparc servers) to the researchers and make their home directories (located on a central server) available on every work station by mounting them via NFS. That way, a user could use any work station and always see the same files. Very practical indeed. The downside is that the home directory is not local which is to say that each access requires network communication. However, for normal work this was fast enough. In those cases where extra processing speed was needed it was often possible to log into the server (which also had the home directories “mounted” in the same location) and start IO bound processes there.

It was only natural that I chose the same setup for my computers at home featuring one server with the home directories on it and — for a while — up to four clients linked to the server.

Life was OK with this setup for a long time. In recent years, however, I ran into performance problems using the browser, which seemed to get more and more sluggish. The problem was the ever-increasing size of web pages and the caching of all the small files belonging to them. They are usually kept in the subdirectory ~/.cache of the home directory (represented by ~). This requires every single file that is to be cached to be transferred over the local network to save it and later transferred back to read it again. Even with a fast local network this is too time-consuming to be comfortable.

The solution was pretty straightforward: Keep this directory in a client-local filesystem. The performance improved quite substantially after that.

IMPORTANT: It is essential to restrict the local directories to the cache only. Another candidate with a lot of small files would be ~/.config, but this would result in keeping non-synchronized copies of the configurations on the clients, which is NOT a good idea. The cache directory, on the other hand, is fine since the local copies are never used to permanently store state but only to retrieve data more quickly than over the internet.
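The post does not say how the cache directory was moved. One possible approach, sketched here under assumptions, is to move ~/.cache to a directory on a client-local disk and leave a symbolic link behind. The function name `relocate_cache` and the layout `<local_root>/<user>/cache` are hypothetical and only serve as an illustration.

```python
import shutil
from pathlib import Path


def relocate_cache(home: Path, local_root: Path) -> Path:
    """Move home/.cache to local_root/<home dir name>/cache and leave a symlink behind."""
    cache = home / ".cache"
    if cache.is_symlink():
        # Already relocated: report where the link points.
        return cache.resolve()

    target = local_root / home.name / "cache"
    target.mkdir(parents=True, exist_ok=True)

    if cache.exists():
        # Carry over existing cache entries, then remove the emptied NFS directory.
        for entry in cache.iterdir():
            shutil.move(str(entry), str(target / entry.name))
        cache.rmdir()

    # From now on ~/.cache resolves to the client-local directory.
    cache.symlink_to(target, target_is_directory=True)
    return target
```

A call such as `relocate_cache(Path.home(), Path("/var/local-cache"))` would have to be run once on every client; the path is only an example. Only the cache is touched, so ~/.config stays on the server. For applications that honor the XDG Base Directory specification, pointing the environment variable XDG_CACHE_HOME at a local directory is an alternative.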

The Modern Network IO Disaster

A while ago I took part in a training on modern web clients and came back home highly motivated to try this new technology on my pet project LittleBrother. Naively, I set up Angular in my home directory and used npm install to install the JavaScript libraries. Since I did the training on a pretty fast Apple MacBook I was used to run times between 10 and 20 seconds. After hitting enter I was confused: The system seemed to hang. Nothing was really happening, or if there was something happening it was in extreme slow motion. I interrupted the command again and again and tried to find the error in the setup, but there was no obvious error. After a while it dawned on me that the tool npm was born in the time of fast notebooks with local SSD filesystems. There was no need to optimize for speed as far as IO access to the filesystem was concerned. Depending on how much IO activity was triggered by the installation, this could result in a substantial impact on performance in the case of my NFS-mounted home directory.

First Measurements

I did the same npm install on the server directly and timed the command:

> time npm install

real 0m26,283s

user 0m20,962s

sys 0m4,496s

The total run time of 26 seconds was realistic since my server is not as fast as a modern MacBook. This confirmed that the tool was actually working!

So, what is the footprint of my — so far empty — Angular project?

> du -sh node_modules/

405M node_modules/

> find ./node_modules/ -type d -ls|wc -l

3578

> find ./node_modules/ -type f -ls|wc -l

40374
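The same footprint can be collected in a single pass. The following is a minimal sketch, not the commands used above: it counts directories and regular files like the two find calls, but it sums apparent file sizes, whereas du reports allocated disk blocks, so the byte total will usually come out somewhat lower than the du figure. The function name `footprint` is made up for this example.

```python
import os


def footprint(root: str) -> tuple[int, int, int]:
    """Return (directories, regular files, total bytes) below root, root included."""
    dirs = files = size = 0
    for current, _dirnames, filenames in os.walk(root):
        dirs += 1  # every visited directory, including root itself
        for name in filenames:
            path = os.path.join(current, name)
            if os.path.islink(path):
                continue  # like "find -type f", skip symbolic links
            files += 1
            size += os.lstat(path).st_size
    return dirs, files, size
```

Calling `footprint("node_modules")` in the project directory should reproduce the directory and file counts shown above.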

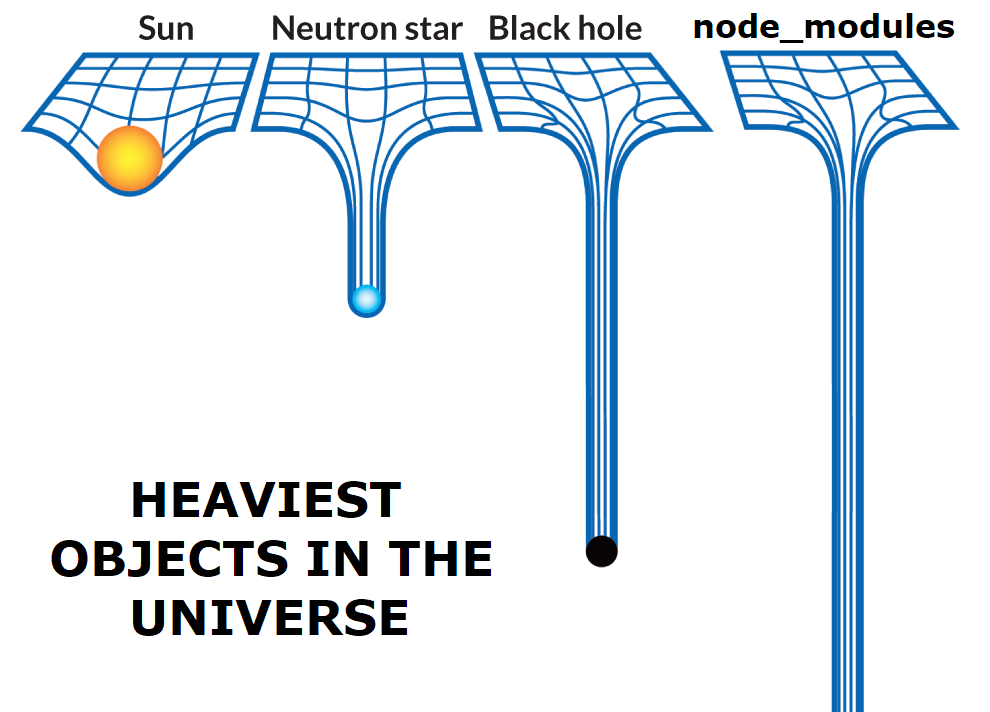

A whopping 405 Megabytes of data in 3,578 directories and 40,374 files! This is probably one of the reasons

for the following meme of the node_modules directory being among the Heaviest Objects in the Universe:

No wonder that npm over NFS never came back! Still, I ran the same timing command over NFS and obtained the following result (after, of course, deleting the node_modules directory):

> time npm install

real 29m12,161s

user 1m7,587s

sys 0m13,837s

It is obvious that the process spends most of the time waiting for network IO. Actually, the user run time of 67 seconds is only a little over three times the time without network transfer. The waiting time is about 28 MINUTES, though. As a result the wall clock execution time is 67 times longer.
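The ratios can be checked with a few lines of arithmetic on the two `time` outputs. This is a rough sketch: treating real minus user minus sys as "waiting time" is a simplification that ignores, for example, work spread over several CPU cores.

```python
import re


def seconds(text: str) -> float:
    """Convert a `time` value such as '29m12,161s' into seconds."""
    match = re.fullmatch(r"(\d+)m(\d+)[,.](\d+)s", text)
    if match is None:
        raise ValueError(f"unexpected time format: {text!r}")
    minutes, whole, fraction = match.groups()
    return int(minutes) * 60 + float(f"{whole}.{fraction}")


# Values copied from the two "time npm install" runs.
local = {"real": seconds("0m26,283s"), "user": seconds("0m20,962s"), "sys": seconds("0m4,496s")}
nfs = {"real": seconds("29m12,161s"), "user": seconds("1m7,587s"), "sys": seconds("0m13,837s")}

slowdown = nfs["real"] / local["real"]            # wall clock factor
waiting = nfs["real"] - nfs["user"] - nfs["sys"]  # time not spent on the CPU
user_ratio = nfs["user"] / local["user"]          # CPU time factor

print(f"wall clock slowdown: {slowdown:.1f}x")
print(f"waiting time: {waiting / 60:.1f} min")
print(f"user time ratio: {user_ratio:.1f}x")
```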

Of course, working with such a delay is out of the question, although the command npm install is rather rare. What about the server process ng serve during development? Does the network make a big difference there? Let’s see…

> ng serve

✔ Browser application bundle generation complete.

Initial Chunk Files | Names | Raw Size

vendor.js | vendor | 2.04 MB |

polyfills.js | polyfills | 314.30 kB |

styles.css, styles.js | styles | 209.43 kB |

main.js | main | 49.68 kB |

runtime.js | runtime | 6.54 kB |

| Initial Total | 2.61 MB

Build at: 2023-04-12T19:02:09.164Z - Hash: 125135a897d82e29 - Time: 28976ms

** Angular Live Development Server is listening on localhost:4200, open your browser on http://localhost:4200/ **

✔ Compiled successfully.

The initial startup takes 29 seconds. This is kind of slow but bearable since it’s only done once for a coding session. What about a typical modification of a single file?

✔ Browser application bundle generation complete.

Initial Chunk Files | Names | Raw Size

main.js | main | 49.68 kB |

runtime.js | runtime | 6.54 kB |

3 unchanged chunks

Build at: 2023-04-12T19:05:59.999Z - Hash: 16d77fb85d4a44ed - Time: 1124ms

✔ Compiled successfully.

A little more than one second is definitely OK. But we can do better…

A Quick Fix

As mentioned before, one way to improve performance is to start relevant processes on the server. This is easily done, but we need to make a tiny change to the command line call since the default settings will make the ng serve process listen on localhost only. Instead, we have to call ng with the parameter --host:

> ng serve --host casimir.chaos

Warning: This is a simple server for use in testing or debugging Angular applications

locally. It hasn't been reviewed for security issues.

Binding this server to an open connection can result in compromising your application or

computer. Using a different host than the one passed to the "--host" flag might result in

websocket connection issues. You might need to use "--disable-host-check" if that's the

case.

✔ Browser application bundle generation complete.

Initial Chunk Files | Names | Raw Size

vendor.js | vendor | 2.04 MB |

polyfills.js | polyfills | 314.30 kB |

styles.css, styles.js | styles | 209.43 kB |

main.js | main | 49.68 kB |

runtime.js | runtime | 6.54 kB |

| Initial Total | 2.61 MB

Build at: 2023-04-13T19:27:43.599Z - Hash: 29e0f82fe0ed1af1 - Time: 3785ms

** Angular Live Development Server is listening on casimir.chaos:4200, open your browser on http://casimir.chaos:4200/ **

✔ Compiled successfully.

So, that’s about four seconds, or more than 7 times as fast. A change of one file is handled in 1.5 seconds initially, but improves to about 0.3 seconds later, which is about 3 times as fast.

✔ Browser application bundle generation complete.

Initial Chunk Files | Names | Raw Size

main.js | main | 49.68 kB |

runtime.js | runtime | 6.54 kB |

3 unchanged chunks

Build at: 2023-04-13T19:28:02.486Z - Hash: b19505ae79ee53a3 - Time: 1575ms

✔ Compiled successfully.

✔ Browser application bundle generation complete.

5 unchanged chunks

Build at: 2023-04-13T19:32:15.499Z - Hash: d5f1761ae35390a4 - Time: 351ms
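For reference, the speed-up factors follow directly from the "Time:" fields of the ng serve outputs. The sketch below only repeats that division; the dictionary keys are labels chosen for this example.

```python
# Build times in milliseconds, copied from the "Time:" fields of the ng serve output.
BUILD_MS = {
    "initial build": {"client over NFS": 28976, "on the server": 3785},
    "warm rebuild": {"client over NFS": 1124, "on the server": 351},
}


def speedup(slow_ms: int, fast_ms: int) -> float:
    """How many times faster the second measurement is than the first."""
    return slow_ms / fast_ms


for step, times in BUILD_MS.items():
    factor = speedup(times["client over NFS"], times["on the server"])
    print(f"{step}: {factor:.1f}x faster on the server")
```

Note that the very first rebuild on the server (1575 ms) was still slower than the rebuild measured over NFS (1124 ms); the advantage only shows once the server process has warmed up.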

ChatGPT

Since everybody asks ChatGPT these days, I did, too: Why is npm install slow on nfs mounted file systems?

This is the answer:

NPM (Node Package Manager) installs packages by downloading them from the internet and then installing them locally on your machine. When you install packages with NPM on an NFS (Network File System) mounted file system, the process can be slow due to various reasons:

Network latency: NFS relies on the network to transfer data between the server and client. If the network connection is slow or has high latency, it can significantly slow down the installation process.

File system performance: The performance of NFS file systems can be slower than local file systems due to various factors, such as network congestion, network hardware, and server load.

Disk I/O: When NPM installs packages, it writes files to the file system. NFS file systems can have slower disk I/O performance than local file systems, which can lead to slower installation times.

Package size: The size of the package being installed can also impact the installation time. Larger packages take longer to download and install, which can be more noticeable on slower NFS file systems.

To improve the performance of NPM on an NFS mounted file system, you can try the following:

Use a local file system: If possible, install packages on a local file system rather than an NFS mounted file system. This will eliminate the network latency and file system performance issues.

Optimize NFS performance: You can optimize the performance of NFS by tuning the NFS settings and optimizing the network configuration.

Use caching: You can use caching tools like npm-cache or Artifactory to cache packages locally. This will reduce the need to download packages from the internet and speed up the installation process.

Use a faster network connection: If possible, use a faster network connection to improve the performance of NFS.

In general, it’s best to avoid installing NPM packages on NFS mounted file systems, as they can be slower than local file systems.

So, let’s see what we make of the suggestions. The first suggestion we have already heeded above by starting processes on the server.

The fourth suggestion is definitely a valid guess.

Network latency and/or throughput could definitely be a problem. However, my local network is kind of OK:

> ping casimir.chaos

PING casimir.chaos (192.168.1.1) 56(84) bytes of data.

64 bytes from casimir.chaos (192.168.1.1): icmp_seq=1 ttl=64 time=0.276 ms

64 bytes from casimir.chaos (192.168.1.1): icmp_seq=2 ttl=64 time=0.271 ms

64 bytes from casimir.chaos (192.168.1.1): icmp_seq=3 ttl=64 time=0.279 ms

A latency of about a quarter of a millisecond is not bad. The throughput was measured using iperf3:

> iperf3 -c casimir.chaos

Connecting to host casimir.chaos, port 5201

[ 5] local 192.168.1.3 port 43424 connected to 192.168.1.1 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 113 MBytes 951 Mbits/sec 0 444 KBytes

[ 5] 1.00-2.00 sec 111 MBytes 928 Mbits/sec 0 444 KBytes

[ 5] 2.00-3.00 sec 111 MBytes 932 Mbits/sec 0 444 KBytes

[ 5] 3.00-4.00 sec 111 MBytes 931 Mbits/sec 0 444 KBytes

[ 5] 4.00-5.00 sec 111 MBytes 932 Mbits/sec 0 444 KBytes

[ 5] 5.00-6.00 sec 111 MBytes 933 Mbits/sec 0 444 KBytes

[ 5] 6.00-7.00 sec 111 MBytes 934 Mbits/sec 0 444 KBytes

[ 5] 7.00-8.00 sec 111 MBytes 930 Mbits/sec 0 444 KBytes

[ 5] 8.00-9.00 sec 112 MBytes 935 Mbits/sec 0 444 KBytes

[ 5] 9.00-10.00 sec 110 MBytes 920 Mbits/sec 0 444 KBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 1.09 GBytes 933 Mbits/sec 0 sender

[ 5] 0.00-10.00 sec 1.08 GBytes 931 Mbits/sec receiver

iperf Done.

Since it’s a Gigabit network connection a throughput of 933 Megabit/sec is probably as fast as it can get. So, there is very little room for improvement there, at least for large files. In principle, I could try a 10 Gigabit network but the hardware is still more expensive, and I may have to rewire the house which would be a hassle.
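A back-of-the-envelope estimate makes the gap between raw throughput and the observed install time visible. It is a sketch under assumptions: the 405M reported by du is taken as 405 MiB, the round-trip time is taken from the ping output, and directories and metadata operations are ignored.

```python
FILES = 40_374                  # regular files in node_modules
TOTAL_BYTES = 405 * 1024**2     # "405M" from du, assumed to mean MiB
THROUGHPUT_BITS = 933e6         # iperf3 result in bits per second
RTT_S = 0.000275                # roughly the ping round-trip time (0.27 to 0.28 ms)
NFS_WALL_S = 29 * 60 + 12.161   # "real" time of npm install over NFS

# Time needed if all data could be streamed at full line speed.
bulk_transfer_s = TOTAL_BYTES * 8 / THROUGHPUT_BITS

# Average cost per file that was actually observed.
per_file_s = NFS_WALL_S / FILES

# The same cost expressed in network round trips.
round_trips_per_file = per_file_s / RTT_S

print(f"pure transfer time: {bulk_transfer_s:.1f} s")
print(f"observed cost per file: {per_file_s * 1000:.1f} ms")
print(f"equivalent round trips per file: {round_trips_per_file:.0f}")
```

Streaming the data would take only a few seconds, while each file costs about 43 ms on average, the equivalent of roughly 150 ping round trips. That is far more than a single network round trip per file, which suggests that the time goes into many synchronous per-file operations rather than into moving bytes.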

The second and the third causes are basically the first cause again, just rephrased. The fourth cause is true for all installations, not just for NFS. Therefore, it does not help.

Maybe we can dive into the NFS options.

Question to ChatGPT: Which nfs settings should be tuned?

There are several NFS settings that can be tuned to improve the performance of NFS mounted file systems. Here are some common settings that can be adjusted:

Increase the number of NFS server threads: The number of threads on the NFS server can be increased to handle more concurrent requests. This can help to reduce the queue length and improve the response time.

Increase the size of the NFS read and write buffers: The default buffer sizes for NFS reads and writes can be increased to improve the throughput of NFS operations.

Adjust the NFS timeout settings: The timeout settings for NFS can be adjusted to improve the reliability of NFS operations. For example, increasing the timeout values can help to reduce the number of dropped or retransmitted packets.

Enable jumbo frames: Jumbo frames can be enabled to reduce the overhead of NFS operations by reducing the number of packets required to transmit data.

Adjust the NFS cache settings: The NFS cache settings can be adjusted to control the amount of data cached on the NFS client. Increasing the cache size can help to reduce the number of NFS requests sent to the server.

Adjust the network settings: The network settings can be adjusted to improve the performance of NFS. For example, increasing the size of the TCP window can help to improve the throughput of NFS operations.

It’s important to note that the optimal NFS settings may vary depending on the specific environment and workload. It’s recommended to test and adjust the settings based on the workload and performance metrics to find the best configuration for your setup.

Let’s go through these suggestions…

Increase the Number of Threads

The default setting is to have eight threads for NFS, which is definitely sufficient since in my home setup only a few clients (npm) are requesting data from the server in a sequential fashion. If npm could be put into a parallel execution mode, this would be a different story, though.

Adjust the Timeout Settings

Timeout settings are relevant in a setup in which the network connection as such is unreliable. With a single direct link between client and server in the room next door we cannot expect any improvement from adjusting timeouts.

Enable Jumbo Frames

Looking at the number of files (40374) and the overall size (405 MByte) we get an average file size of about 10500 Bytes. My standard setting for the MTU is 1500 Bytes. This would result in the average file being split into 7 to 8 packets for transfer. Raising the size to a Jumbo Frame with 9000 Bytes as suggested would decrease this number to 2. This sounds significant at first, but we have to be careful: The average size is probably not as important as the effective size distribution.
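The average and the resulting packet counts can be reproduced as follows. This is a simplified sketch: it assumes that du's 405M means 405 MiB and that every frame carries a full MTU of file data, ignoring all protocol headers. The helper name `frames_needed` is made up for this example.

```python
import math

FILES = 40_374
TOTAL_BYTES = 405 * 1024**2  # "405M" from du, assumed to mean MiB

average = TOTAL_BYTES / FILES


def frames_needed(size_bytes: float, mtu: int) -> int:
    """Whole frames needed if each frame carried a full MTU of file data."""
    return math.ceil(size_bytes / mtu)


print(f"average file size: {average:.0f} bytes")
for mtu in (1500, 9000):
    print(f"MTU {mtu}: ratio {average / mtu:.2f}, whole frames {frames_needed(average, mtu)}")
```

The plain ratio for an MTU of 1500 is just above 7, so rounding up to whole frames gives 8; for 9000 bytes the result is 2 frames.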

The question is: How many files are actually affected by the change of MTU? This can only be determined by creating a histogram of the file sizes. The first step is to get a list of all the files and their sizes. This can be done using find:

find . -type f -ls > file.lst

The file file.lst will contain entries like this:

32246805 4 -rw-r--r-- 1 mr users 160 Apr 17 22:24 ./typed-assert/jest.config.js

32246871 16 -rw-r--r-- 1 mr users 12987 Apr 17 22:27 ./typed-assert/API.md

3411293 4 -rw-r--r-- 1 mr users 3106 Apr 17 22:27 ./typed-assert/build/index.d.ts

...

We will have to extract the sizes in the seventh column and put them into bins. It would be nice to have one of the bins exactly at the maximum size of one network frame. So, let’s find out what the maximum size is. For this we use ping with a given payload packet size:

mr@ute:~$ ping casimir.chaos -s 1472 -M do

PING casimir.chaos (192.168.1.1) 1472(1500) bytes of data.

1480 bytes from casimir.chaos (192.168.1.1): icmp_seq=1 ttl=64 time=0.400 ms

--- casimir.chaos ping statistics ---

2 packets transmitted, 2 received, 0% packet loss, time 1015ms

rtt min/avg/max/mdev = 0.358/0.379/0.400/0.021 ms

mr@ute:~$ ping casimir.chaos -s 1473 -M do

PING casimir.chaos (192.168.1.1) 1473(1501) bytes of data.

ping: local error: message too long, mtu=1500

--- casimir.chaos ping statistics ---

2 packets transmitted, 0 received, +2 errors, 100% packet loss, time 1004ms

As we can see, ping is able to transmit 1472 bytes with the given MTU of 1500 but not 1473 bytes. So, I choose one of the bin boundaries to be 1472 bytes. The bin sizes will be logarithmic, with the smallest bin boundary at an eighth of the 1472 bytes, which is 184 bytes. The gawk script below will do the work for us:

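BEGIN {

# smallest bin boundary: one eighth of the 1472-byte single-frame payload

MinFileSize = 1472.0 / 8.0;

Count = 0;

}

{

# the seventh column of the "find -ls" output is the file size in bytes

file_size = $7;

if (file_size < MinFileSize) {

# everything smaller than the minimum file size will end up in the smallest bin

bin = 0;

} else {

# logarithmic binning: the bin index grows by one each time the size doubles

bin = int(log(file_size / MinFileSize) / log(2));

}

hist[bin]++;

Count++;

}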

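END {

# gawk-specific: visit the bins in ascending numeric order

PROCINFO["sorted_in"] = "@ind_num_asc"

for (h in hist) {

# bin h holds sizes from MinFileSize * 2^h up to (but excluding) MinFileSize * 2^(h+1);

# bin 0 additionally holds everything smaller than MinFileSize

upper_edge = MinFileSize * 2 ^ (h + 1)

printf "files < %i bytes: %i %.1f%%\n", upper_edge, hist[h], 100 * hist[h] / Count

}

}

When we call it

gawk -f ./file-size-histogram.awk file.lst

the result is as follows (each line counts the files from the previous boundary up to the boundary shown):

files < 368 bytes: 15188 37.6%

files < 736 bytes: 4892 12.1%

files < 1472 bytes: 6159 15.3%

files < 2944 bytes: 6020 14.9%

files < 5888 bytes: 3727 9.2%

files < 11776 bytes: 2268 5.6%

files < 23552 bytes: 1050 2.6%

files < 47104 bytes: 560 1.4%

files < 94208 bytes: 226 0.6%

files < 188416 bytes: 148 0.4%

files < 376832 bytes: 82 0.2%

files < 753664 bytes: 25 0.1%

files < 1507328 bytes: 11 0.0%

files < 3014656 bytes: 6 0.0%

files < 6029312 bytes: 3 0.0%

files < 12058624 bytes: 10 0.0%

Because the bin index is truncated, each bin starts at 184 bytes times a power of two and ends just below the next boundary, so the first three bins together cover all files smaller than 1472 bytes. This means that 65.0% of all files can be transferred in a single one of the normal frames (ignoring protocol headers). The Jumbo Frames would only be beneficial for the remaining 35% of files. Assuming Pareto and given the fact that the throughput for larger files is pretty efficient (see above), it is very likely that Jumbo frames will not really help.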
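As a cross-check that does not depend on bin boundaries, the share of files at or below a given size can be counted directly from the `find -ls` listing. The sketch below assumes the eleven-column layout shown in the sample entries above; the function names are made up for this example, and the sample lines stand in for the real file.lst.

```python
def file_sizes(lines):
    """Extract the size column (seventh field) from `find -ls` output lines."""
    sizes = []
    for line in lines:
        # Split into at most 11 fields so that file names with spaces stay intact.
        fields = line.split(None, 10)
        if len(fields) >= 11 and fields[6].isdigit():
            sizes.append(int(fields[6]))
    return sizes


def share_at_most(sizes, limit):
    """Fraction of files whose size does not exceed limit bytes."""
    return sum(1 for size in sizes if size <= limit) / len(sizes)


# Sample entries copied from the listing above.
SAMPLE = [
    "32246805 4 -rw-r--r-- 1 mr users 160 Apr 17 22:24 ./typed-assert/jest.config.js",
    "32246871 16 -rw-r--r-- 1 mr users 12987 Apr 17 22:27 ./typed-assert/API.md",
    "3411293 4 -rw-r--r-- 1 mr users 3106 Apr 17 22:27 ./typed-assert/build/index.d.ts",
]

sizes = file_sizes(SAMPLE)
for limit in (1472, 16 * 1024):
    print(f"files <= {limit} bytes: {100 * share_at_most(sizes, limit):.1f}%")
```

To evaluate the complete listing, replace `SAMPLE` with the lines read from file.lst. The two limits correspond to the payload of a standard frame and to a 16-kilobyte NFS buffer.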

Increase the Size of NFS Read and Write Buffers

The default read size and write size of the NFS mount is 16 kilobytes. Looking at the file size histogram (see above) again one finds that 96.3% of all files will fit into a single buffer. Again, we cannot expect any improvement from tweaking the NFS buffers.

Adjust the NFS Cache Settings

Adjusting cache parameters is a little more extensive than expected. This page has been very helpful: https://www.avidandrew.com/understanding-nfs-caching.html. Comparing the suggested parameter combination in the article to the current status quo in my system I found one major difference: the server in the setup apparently used the sync setting, which is safer but also considerably slower than the async setting. The author says:

Thus, if you use async on the server side, the data will be confirmed to be written as soon as it hits the server’s RAM. In the case of a power failure, this data would be lost. Conversely, sync waits for the data to be written to the disk or other stable storage (and confirmed) before returning a success. It is clear that sync is the appropriate option to use on the server side.

He does not recommend the async setting at all. But let’s try it on the client:

> time npm install

real 0m30,941s

user 0m19,396s

sys 0m10,863s

Really? This is just under 5 seconds (or about 18%) slower than the server-local call! So with the async option active the NFS client feels like having local storage. It’s amazing. The question is how severe the safety issue will be.

Adjust the Network Settings

This is a little too unspecific to be really helpful. I would assume that most of the suggestions above will cover this point.

Summary

Starting with an innocent attempt to do some Angular development on my client-server-based home setup, I ran into severe problems. What at first looked like a bug in the npm package manager or my local setup turned out to be a systematic performance issue caused by the NFS mount I use for my home directory.

I looked into the problem and found out that there is a quick work-around by moving the actual work onto the server hosting the home directory. In my specific setup, with the server being a regular Linux system supporting logins, this did not entail any major disadvantages. However, my development work would not be “transparent” anymore, which is to say that I would have to do something specifically different for npm than I do for just about everything else.

Next, I decided to ask ChatGPT for possible causes of the issue and also for potential mitigations. ChatGPT gave good answers, although most of them were not applicable to my setup. However, the one hint regarding NFS cache settings really made a difference, yielding an enormous performance boost. But this was only AFTER I googled again for specific cache settings since the ChatGPT answer was not detailed enough. For the time being, I’m accepting the additional safety risk resulting from the async setting and will see what happens. Hopefully, I will not regret my decision.

It’s not only npm which is blazingly fast now. It’s also Firefox, which heavily uses local files in the mounted home directory. Its startup used to be very sluggish, especially the first one after login. Now it is almost instantaneous.